The first step in building the tagging system is fetching the data. Fortunately, this is exactly what CKAN was designed for.

ckanclient (https://github.com/okfn/ckanclient) is a Python library that makes it easy to access a CKAN instance.

For this project there are four stages to the data access.

Step 1 - Finding the datasets

For this project, the datasets to be examined have been restricted to CSV files. To restrict the datasets returned by CKAN to this format, we pass a query on the resource format to package_search, called on a ckanclient CkanClient instance (here named ckan):

search = ckan.package_search("res_format:CSV")

result_gen = search["results"]
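As a hedged sketch that does not depend on ckanclient, the same CSV-only search can be written against CKAN's action API (/api/3/action/package_search, with its fq, rows and start parameters). The base URL, the page size of 100 and the helper names are assumptions for illustration; fetch is injectable so the paging logic can be exercised without a network.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Dict, Iterator


def build_search_url(base_url: str, fq: str, rows: int, start: int) -> str:
    """Build a package_search URL for one page of results (assumes /api/3/action/)."""
    params = urllib.parse.urlencode({"fq": fq, "rows": rows, "start": start})
    return f"{base_url.rstrip('/')}/api/3/action/package_search?{params}"


def fetch_json(url: str) -> Dict:
    """Download and decode one JSON response."""
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def search_csv_datasets(base_url: str, rows: int = 100,
                        fetch: Callable[[str], Dict] = fetch_json) -> Iterator[Dict]:
    """Yield package dicts page by page, similar in spirit to ckanclient's result generator."""
    start = 0
    while True:
        page = fetch(build_search_url(base_url, "res_format:CSV", rows, start))["result"]
        results = page["results"]
        yield from results
        start += len(results)
        # Stop on an empty page or once every matching dataset has been seen.
        if not results or start >= page["count"]:
            break
```

Because this is a generator, pages are only requested as the caller iterates, which fits the slow, incremental crawling described later.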

Step 2 - Data acquisition

This gives a result generator that can be iterated over like a normal Python list (although, being a generator, it can only be consumed once). Each element provides not only a link to the dataset itself but also its associated metadata.
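A minimal sketch of walking the results, assuming each element is a CKAN package dict with "name", "tags" and "resources" keys (each resource carrying "url" and "format"). Tags are returned as plain strings by some CKAN APIs and as dicts with a "name" key by others, so the hypothetical tag_names helper accepts both.

```python
from typing import Dict, Iterable, Iterator, List, Tuple


def tag_names(package: Dict) -> List[str]:
    """Normalise tags, which may be strings or dicts with a "name" key."""
    return [t["name"] if isinstance(t, dict) else t for t in package.get("tags", [])]


def csv_resources(packages: Iterable[Dict]) -> Iterator[Tuple[str, str, List[str]]]:
    """Yield (dataset name, resource URL, tags) for every resource marked as CSV."""
    for package in packages:
        tags = tag_names(package)
        for resource in package.get("resources", []):
            # Format labels are entered by publishers, so compare case-insensitively.
            if (resource.get("format") or "").strip().upper() == "CSV":
                yield package["name"], resource["url"], tags
```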

Step 3 - Caching

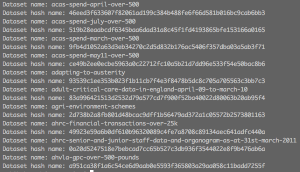

For the sake of both system efficiency and the data providers' bandwidth, it is important to implement a caching system. The cache key is generated by taking the SHA hash of the dataset name.
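The text does not say which SHA variant is used, so this sketch assumes SHA-1 via Python's hashlib. Hashing gives a fixed-length, filesystem-safe key whatever characters appear in the dataset name.

```python
import hashlib


def cache_key(dataset_name: str) -> str:
    """Hex SHA-1 digest of the dataset name (SHA-1 is an assumption)."""
    return hashlib.sha1(dataset_name.encode("utf-8")).hexdigest()
```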


A folder is created in the filesystem, named with the hashed value of the dataset name. Arbitrary data can be stored within the folder; currently this includes both the data itself and the tags associated with the dataset. It is important that these files are named consistently across all datasets so that the processing portion of the program can access them simply.
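A sketch of that layout, assuming SHA-1 for the folder name and the hypothetical fixed file names data.csv and tags.json; any names work as long as every folder uses the same ones.

```python
import hashlib
import json
from pathlib import Path
from typing import List

# Hypothetical file names, shared by every dataset folder.
DATA_FILE = "data.csv"
TAGS_FILE = "tags.json"


def dataset_dir(cache_root: Path, dataset_name: str) -> Path:
    """Folder for one dataset, named by the SHA-1 of the dataset name."""
    return cache_root / hashlib.sha1(dataset_name.encode("utf-8")).hexdigest()


def store_dataset(cache_root: Path, dataset_name: str, data: bytes, tags: List[str]) -> Path:
    """Write the raw data and its tags into the dataset's cache folder."""
    folder = dataset_dir(cache_root, dataset_name)
    folder.mkdir(parents=True, exist_ok=True)
    (folder / DATA_FILE).write_bytes(data)
    (folder / TAGS_FILE).write_text(json.dumps(tags), encoding="utf-8")
    return folder


def load_tags(cache_root: Path, dataset_name: str) -> List[str]:
    """Read the tags back, as the processing stage would."""
    text = (dataset_dir(cache_root, dataset_name) / TAGS_FILE).read_text(encoding="utf-8")
    return json.loads(text)
```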

With caching in place, subsequent runs of the system need only fetch the list of datasets and check whether the hash of each dataset name is present in the filesystem. A dataset is downloaded only if it is not already present.
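A minimal sketch of the check-then-download step, assuming SHA-1 folder names and a hypothetical data.csv file; download is any callable returning the raw bytes. It checks for the data file rather than just the folder, and writes through a temporary ".part" file, so an interrupted run does not leave behind something that looks like a complete cache entry.

```python
import hashlib
from pathlib import Path
from typing import Callable

DATA_FILE = "data.csv"  # hypothetical; must match the name used when storing


def ensure_cached(cache_root: Path, dataset_name: str, download: Callable[[], bytes]) -> bool:
    """Download the dataset only if it is not already cached.

    Returns True if a download happened, False on a cache hit.
    """
    folder = cache_root / hashlib.sha1(dataset_name.encode("utf-8")).hexdigest()
    target = folder / DATA_FILE
    if target.exists():
        return False
    folder.mkdir(parents=True, exist_ok=True)
    partial = folder / (DATA_FILE + ".part")
    partial.write_bytes(download())
    partial.replace(target)  # rename only once the download has completed
    return True
```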


This approach allows the entire collection of datasets to be obtained incrementally, so as to avoid putting too much continuous load on the server.
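One way to sketch the intentionally slowed crawl, assuming a fixed pause between downloads (the 60-second default is an arbitrary assumption). The cache check and download are passed in as callables, and sleep is injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable, Iterable


def crawl_politely(names: Iterable[str],
                   is_cached: Callable[[str], bool],
                   download: Callable[[str], None],
                   delay_seconds: float = 60.0,
                   sleep: Callable[[float], None] = time.sleep) -> int:
    """Download uncached datasets one at a time, pausing between requests.

    Cached datasets are skipped without any delay, so a restarted run
    quickly catches up to where the previous one stopped.
    Returns the number of datasets downloaded.
    """
    downloaded = 0
    for name in names:
        if is_cached(name):
            continue
        if downloaded:
            sleep(delay_seconds)  # pause only between actual downloads
        download(name)
        downloaded += 1
    return downloaded
```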

With this in mind, a virtual private server was provisioned this week so that this intentionally slowed access to the data can run 24/7. This server will also be used in the future for batch processing of the datasets.

Step 4 - Data access

The data is now stored in files in a standard filesystem, so it can be accessed in the traditional manner. However, it is not quite that simple: during testing it became apparent that even datasets marked as CSV files are often not actually in that format. Because of this, it was necessary to add a pre-processing layer between the raw data and the processing system. The current implementation is quite simplistic, but it throws away many of the broken files, leaving mostly valid datasets. Importantly, it has been implemented so that it can be improved as the project progresses, and it is already good enough to begin testing.
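The filter used in the project is not shown, so the following is only a hypothetical heuristic of the same kind, built on Python's csv module: a file is kept if it parses into at least two non-blank rows of the same width with at least two columns. This rejects things like HTML error pages, single-column text and ragged rows, at the cost of also rejecting some legitimately irregular files.

```python
import csv
import io


def looks_like_csv(text: str, min_rows: int = 2, min_columns: int = 2) -> bool:
    """Heuristic check that text parses as a rectangular CSV table."""
    try:
        rows = [row for row in csv.reader(io.StringIO(text))
                if any(cell.strip() for cell in row)]  # ignore blank lines
    except csv.Error:
        return False
    if len(rows) < min_rows:
        return False
    width = len(rows[0])
    return width >= min_columns and all(len(row) == width for row in rows)
```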

Observation suggests that a number of datasets are transposed CSVs. This could be relatively simple to fix programmatically and will be experimented with in the near future to increase the number of viable datasets.
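A possible starting point for that experiment, under the assumption that a transposed file has its labels running down the first column and numeric values across the first row. The detection rule here is a hypothetical heuristic, not a tested one; the transpose itself is just zip over the rows.

```python
from typing import List

Table = List[List[str]]


def is_number(cell: str) -> bool:
    try:
        float(cell)
        return True
    except ValueError:
        return False


def transpose(rows: Table) -> Table:
    """Swap rows and columns (assumes a rectangular table)."""
    return [list(col) for col in zip(*rows)]


def looks_transposed(rows: Table) -> bool:
    """Heuristic: labels in the first column, numbers across the first row.

    A normal table usually has non-numeric column names in its first row,
    so a numeric first row plus an all-text first column is treated as a
    sign that the table is on its side.
    """
    if len(rows) < 2 or len(rows[0]) < 2:
        return False
    first_row_numeric = all(is_number(c) for c in rows[0][1:])
    first_col_text = all(not is_number(r[0]) for r in rows)
    return first_row_numeric and first_col_text


def normalise(rows: Table) -> Table:
    return transpose(rows) if looks_transposed(rows) else rows
```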

Summary

- Dataset acquisition (including tag metadata).

- Caching system for datasets.

- Automated recreation of the cache.

- Pre-processing of the datasets to improve data quality.